Note that the head is in a plexiglass box at the Museum of Fine Arts in Boston, MA. Can't remember whose head it is though.

The goal is to get a dense 3d reconstruction (photogrammetry) from those 5 images using SfM10 (Structure from Motion) and MVS10 (Multi-View Stereo). Using SfM10 to get the camera poses (in the form of an nvm file) is usually a no-brainer, so I won't talk about it here. MVS10 is a bit trickier, as you have to balance the accuracy and the density (number of 3d points) of the reconstruction, usually by playing with the max reprojection error.
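
If you want to peek at what SfM10 hands over to MVS10, here is a minimal sketch of a reader for the nvm file. It assumes SfM10 writes the standard NVM_V3 layout popularized by VisualSFM (one line per camera: file name, focal length, rotation quaternion WXYZ, camera center, radial distortion, 0; then one line per 3d point: XYZ, RGB, number of measurements, and for each measurement the image index, feature index and x y relative to the image center). The class and function names are made up for illustration, and only the first model in the file is read.

```python
from dataclasses import dataclass, field


@dataclass
class Camera:
    filename: str
    focal: float
    quat_wxyz: tuple  # camera rotation as a unit quaternion (w, x, y, z)
    center: tuple     # camera center in world coordinates
    radial: float     # radial distortion coefficient


@dataclass
class Point:
    xyz: tuple
    rgb: tuple
    # (image index, feature index, x, y), with x, y measured from the image center
    measurements: list = field(default_factory=list)


def parse_nvm(text):
    """Parse the first model of an NVM_V3 file (hypothetical helper, not part of SfM10)."""
    lines = text.splitlines()
    if not lines or not lines[0].startswith("NVM_V3"):
        raise ValueError("not an NVM_V3 file")
    tokens = " ".join(lines[1:]).split()  # layout is whitespace-separated
    pos = 0

    def take(n=1):
        nonlocal pos
        out = tokens[pos:pos + n]
        pos += n
        return out

    cameras = []
    for _ in range(int(take()[0])):
        name, f, qw, qx, qy, qz, cx, cy, cz, r, _trailing_zero = take(11)
        cameras.append(Camera(name, float(f),
                              (float(qw), float(qx), float(qy), float(qz)),
                              (float(cx), float(cy), float(cz)), float(r)))

    points = []
    for _ in range(int(take()[0])):
        x, y, z, red, green, blue, n = take(7)
        pt = Point((float(x), float(y), float(z)), (int(red), int(green), int(blue)))
        for _ in range(int(n)):
            img, feat, u, v = take(4)
            pt.measurements.append((int(img), int(feat), float(u), float(v)))
        points.append(pt)
    return cameras, points


# Tiny hand-written example: two cameras, one 3d point seen by both.
SAMPLE = """NVM_V3

2
a.jpg 1000 1 0 0 0 0 0 0 0 0
b.jpg 1000 1 0 0 0 1 0 0 0 0

1
0.5 0.2 5 255 128 0 2 0 0 100 40 1 0 -100 40
"""
cams, pts = parse_nvm(SAMPLE)
print(len(cams), len(pts))  # 2 1
```

Tokenizing the whole body instead of going line by line keeps the reader tolerant of blank lines between sections.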

Input to MVS10:

- nvm file = duh.nvm
- Min match number (camera pair selection) = 100
- Min average separation angle (camera pair selection) = 0.1
- radius (disparity map) = 32
- alpha (disparity map) = 0.9
- color truncation (disparity map) = 20
- gradient truncation (disparity map) = 10
- epsilon (disparity map) = 255^2*10^-4
- disparity tolerance (disparity map) = 0
- downsampling factor (disparity map) = 2
- sampling step (dense reconstruction) = 1
- Min separation angle (low-confidence 3D points) = 0.1
- Min image point number (low-confidence 3D points) = 3
- Max reprojection error (low-confidence image points) = 8
- Radius (animated gif frames) = 1
- Angle amplitude (animated gif frames) = 5
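
The disparity map parameters (radius, alpha, color truncation, gradient truncation, epsilon) carry the same names as in cost-volume filtering (Hosni et al.), where a truncated color + gradient matching cost is smoothed with a guided filter before picking the best disparity. Assuming MVS10 works along those lines (an assumption, not something stated here), the sketch below computes that truncated cost on a rectified pair of grayscale scanlines and picks the disparity by winner-take-all. It deliberately skips the guided-filter smoothing (which is where radius and epsilon would come in), the downsampling and the disparity tolerance check; all function names are hypothetical. It also shows where the odd-looking epsilon value comes from: epsilon regularizes a local variance, so the usual 10^-4 for intensities in [0, 1] becomes 255^2*10^-4 (about 6.5) for intensities in [0, 255].

```python
def gradient_x(row):
    """Horizontal gradient of a scanline by central differences (indices clamped at the ends)."""
    n = len(row)
    return [(row[min(i + 1, n - 1)] - row[max(i - 1, 0)]) / 2.0 for i in range(n)]


def cost_volume(left, right, max_disp, alpha=0.9, tau_color=20.0, tau_grad=10.0):
    """cost[x][d] for matching left[x] with right[x - d] (rectified scanlines, levels 0..255).

    C = (1 - alpha) * min(|I_L - I_R|, tau_color) + alpha * min(|dI_L/dx - dI_R/dx|, tau_grad)
    """
    gl, gr = gradient_x(left), gradient_x(right)
    worst = (1 - alpha) * tau_color + alpha * tau_grad  # used when x - d falls off the image
    volume = []
    for x in range(len(left)):
        costs = []
        for d in range(max_disp + 1):
            xr = x - d
            if xr < 0:
                costs.append(worst)
                continue
            c_color = min(abs(left[x] - right[xr]), tau_color)
            c_grad = min(abs(gl[x] - gr[xr]), tau_grad)
            costs.append((1 - alpha) * c_color + alpha * c_grad)
        volume.append(costs)
    return volume


def winner_take_all(volume):
    """For each pixel, pick the disparity with the lowest cost (no cost filtering here)."""
    return [min(range(len(costs)), key=costs.__getitem__) for costs in volume]


def scale_epsilon(eps_unit_range, max_level=255):
    """Epsilon regularizes a variance, so rescaling intensities from [0, 1] to
    [0, max_level] multiplies it by max_level**2."""
    return max_level ** 2 * eps_unit_range


# Synthetic scanlines where the true disparity is 2 everywhere.
base = [10, 200, 40, 160, 90, 250, 0, 120, 220, 70, 180, 30]
left, right = base[:10], base[2:12]  # left[x] == right[x - 2]
print(winner_take_all(cost_volume(left, right, max_disp=3))[3:9])  # [2, 2, 2, 2, 2, 2]
print(scale_epsilon(1e-4))  # about 6.5
```

With alpha = 0.9 the gradient term dominates, which makes the cost less sensitive to exposure differences between the two images; the truncations cap the penalty at occlusions and other outliers.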

I used a max reprojection error of 8.0 pixels instead of the usual 2.0 pixels to get more 3d points in the reconstruction.
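
To see why a larger max reprojection error yields more 3d points, here is a generic sketch of low-confidence filtering driven by the last three reconstruction parameters in the list. It is not MVS10's code, and the exact rules are assumptions: image points farther than the max reprojection error from the projected 3d point are dropped, 3d points left with fewer than the min image point number are dropped, and 3d points whose widest triangulation angle (assumed to be in degrees) is below the min separation angle are dropped. Cameras are assumed to be undistorted pinholes with the principal point at the image center; all names are hypothetical.

```python
import math


def project(R, C, f, X):
    """Project world point X with an undistorted pinhole camera (rotation R, center C, focal f).
    Returns coordinates relative to the principal point (assumed at the image center)."""
    d = [X[i] - C[i] for i in range(3)]
    xc = [sum(R[r][k] * d[k] for k in range(3)) for r in range(3)]
    return (f * xc[0] / xc[2], f * xc[1] / xc[2])


def ray_angle_deg(C1, C2, X):
    """Angle in degrees at X between the rays toward camera centers C1 and C2."""
    a = [C1[i] - X[i] for i in range(3)]
    b = [C2[i] - X[i] for i in range(3)]
    cos = sum(p * q for p, q in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def filter_points(cameras, points, max_reproj=2.0, min_images=3, min_angle_deg=0.1):
    """cameras: list of (R, C, f); points: list of (X, [(camera_index, x, y), ...]).
    Returns (X, kept_camera_indices) for the 3d points that survive all three checks."""
    kept = []
    for X, observations in points:
        # 1. Drop image points whose reprojection error exceeds the threshold.
        good = []
        for ci, x, y in observations:
            R, C, f = cameras[ci]
            px, py = project(R, C, f, X)
            if math.hypot(px - x, py - y) <= max_reproj:
                good.append(ci)
        # 2. Drop 3d points seen in too few images.
        if len(good) < min_images:
            continue
        # 3. Drop 3d points whose widest triangulation angle is too small.
        widest = max((ray_angle_deg(cameras[i][1], cameras[j][1], X)
                      for n, i in enumerate(good) for j in good[n + 1:]), default=0.0)
        if widest >= min_angle_deg:
            kept.append((X, good))
    return kept


# Toy scene: three identical cameras translated along x, looking along +z.
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
CAMS = [(I3, (0.0, 0.0, 0.0), 1000.0),
        (I3, (1.0, 0.0, 0.0), 1000.0),
        (I3, (2.0, 0.0, 0.0), 1000.0)]
X = (0.5, 0.2, 5.0)
PTS = [
    (X, [(0, 100.0, 40.0), (1, -100.0, 40.0), (2, -300.0, 40.0)]),  # exact observations
    (X, [(0, 100.0, 40.0), (1, -100.0, 40.0), (2, -295.0, 40.0)]),  # 5 px off in camera 2
]
print(len(filter_points(CAMS, PTS, max_reproj=2.0)))  # 1
print(len(filter_points(CAMS, PTS, max_reproj=8.0)))  # 2
```

The noisy point loses its third observation at 2 pixels, falls below the min image point number of 3 and is discarded; at 8 pixels it is kept. That is the density gained, and the 5-pixel disagreement is the accuracy given up.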

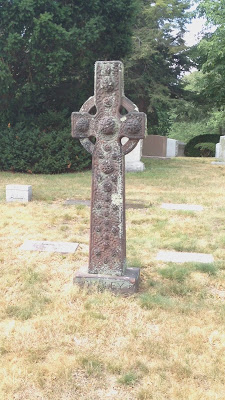

Here's the point cloud in the Sketchfab viewer: