In this post, I am gonna present three ways to preserve the colors of the original image when using Gatys' Neural Style algorithm.

Here are the content and style images:

Hmm, I didn't even realize there was a watermark on the style image. It should really have been cropped out, but it doesn't really matter.

Experiment 1:

Let's use the content and style images as-is and ask Gatys' Neural Style (Justin Johnson's implementation on github) to keep the original colors of the content image (-original_colors 1). Note that I am using a content weight of 1. and a style weight of 5. The rest is pretty standard.
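For reference, here is roughly what -original_colors 1 does, as far as I can tell from reading neural_style.lua: the style transfer runs on the full-color images, and only at the end is the luminance (Y) of the result combined with the chrominance (U and V) of the content image. The sketch below works on plain lists of RGB pixels in [0, 1] and uses the standard BT.601 YUV coefficients. Whether Torch's image.rgb2yuv uses exactly the same numbers is an assumption, and the function names are made up for illustration.

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel (floats in [0, 1]) to YUV with BT.601 coefficients."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.14713 * r - 0.28886 * g + 0.436 * b
    v = 0.615 * r - 0.51499 * g - 0.10001 * b
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Inverse of rgb_to_yuv, clamped to [0, 1]."""
    r = y + 1.13983 * v
    g = y - 0.39465 * u - 0.58060 * v
    b = y + 2.03211 * u
    return tuple(min(1.0, max(0.0, c)) for c in (r, g, b))


def keep_original_colors(content, stylized):
    """Assumed equivalent of -original_colors 1.

    content, stylized: equal-length lists of (r, g, b) pixels.
    Returns pixels with the luminance of `stylized` and the colors of `content`.
    """
    if len(content) != len(stylized):
        raise ValueError("images must have the same number of pixels")
    out = []
    for c_px, s_px in zip(content, stylized):
        y_s, _, _ = rgb_to_yuv(*s_px)    # texture/brightness from the stylized result
        _, u_c, v_c = rgb_to_yuv(*c_px)  # colors from the content image
        out.append(yuv_to_rgb(y_s, u_c, v_c))
    return out
```

Since the colors are only put back after the optimization has finished, the optimizer itself never knows which colors will end up in the final image.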

Here are the Neural Style parameters used:

#!/bin/bash
# Experiment 1: original images, content colors kept with -original_colors 1.
th ../../neural_style.lua \
-style_image style_image.jpg \
-style_blend_weights nil \
-content_image content_image.jpg \
-image_size 512 \
-gpu -1 \
-content_weight 1. \
-style_weight 5. \
-tv_weight 1e-5 \
-num_iterations 1000 \
-normalize_gradients \
-init image \
-init_image content_image.jpg \
-optimizer lbfgs \
-learning_rate 1e1 \
-lbfgs_num_correction 0 \
-print_iter 50 \
-save_iter 100 \
-output_image new_content_image.jpg \
-style_scale 1.0 \
-original_colors 1 \
-pooling max \
-proto_file ../../models/VGG_ILSVRC_19_layers_deploy.prototxt \
-model_file ../../models/VGG_ILSVRC_19_layers.caffemodel \
-backend nn \
-seed -1 \
-content_layers relu4_2 \
-style_layers relu1_1,relu2_1,relu3_1,relu4_1,relu5_1

Yeah, it's not that great. I think we can do better.

Experiment 2:

Let's color match the style image to the content image and ask Gatys' Neural Style not to keep the original colors (-original_colors 0). Note that I am using a content weight of 1. and a style weight of 5. The rest is pretty standard.

To color match the style image to the content image, I use my own software called thecolormatcher. It's very basic stuff.
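thecolormatcher's code isn't shown here, so this is only a minimal sketch of one simple way to color match: shift and scale each RGB channel of the style image so that its mean and standard deviation match those of the content image. thecolormatcher may well work differently, and matching the full color covariance instead of per-channel statistics is a common refinement. Images are plain lists of (r, g, b) tuples in [0, 1], and the function name is made up.

```python
from statistics import fmean, pstdev


def match_colors(style, content):
    """Return a copy of `style` whose per-channel mean and standard deviation
    match those of `content`. Both are lists of (r, g, b) tuples in [0, 1]."""
    out_channels = []
    for ch in range(3):
        s = [px[ch] for px in style]
        c = [px[ch] for px in content]
        s_mean, s_std = fmean(s), pstdev(s)
        c_mean, c_std = fmean(c), pstdev(c)
        # A flat style channel has no spread to rescale: map it to the content mean.
        scale = c_std / s_std if s_std > 0 else 0.0
        out_channels.append(
            [min(1.0, max(0.0, (v - s_mean) * scale + c_mean)) for v in s]
        )
    # Re-assemble the three channels into (r, g, b) pixels.
    return [tuple(vals) for vals in zip(*out_channels)]
```

The color-matched style image then has roughly the same overall palette as the content image, so there is less reason for the style transfer to pull the colors away from the original.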

Here are the Neural Style parameters used:

#!/bin/bash
# Experiment 2: color-matched style image, -original_colors 0, style weight 5.
th ../../neural_style.lua \
-style_image new_style_image.jpg \
-style_blend_weights nil \
-content_image content_image.jpg \
-image_size 512 \
-gpu -1 \
-content_weight 1. \
-style_weight 5. \
-tv_weight 1e-5 \
-num_iterations 1000 \
-normalize_gradients \
-init image \
-init_image content_image.jpg \
-optimizer lbfgs \
-learning_rate 1e1 \
-lbfgs_num_correction 0 \
-print_iter 50 \
-save_iter 100 \
-output_image new_content_image.jpg \
-style_scale 1.0 \
-original_colors 0 \
-pooling max \
-proto_file ../../models/VGG_ILSVRC_19_layers_deploy.prototxt \
-model_file ../../models/VGG_ILSVRC_19_layers.caffemodel \
-backend nn \
-seed -1 \
-content_layers relu4_2 \
-style_layers relu1_1,relu2_1,relu3_1,relu4_1,relu5_1

Let's change the weight for the style image from 5. to 20. in order to get more of the texture of the style image in the resulting image.
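As I understand Johnson's implementation, each weight simply scales one term of the loss that L-BFGS minimizes, so going from 5. to 20. makes the style term four times heavier relative to the content term. A rough sketch of the objective, where $\vec{x}$ is the generated image, $\vec{p}$ the content image, $\vec{a}$ the style image, $C = \{\text{relu4\_2}\}$ and $S = \{\text{relu1\_1}, \dots, \text{relu5\_1}\}$ (per-layer normalization is left out, and with -normalize_gradients each layer's gradient is rescaled before being weighted, so the ratio is a rough guide rather than an exact balance):

$$
\mathcal{L}_{\text{total}}(\vec{x}) = \alpha \sum_{l \in C} \mathcal{L}^{l}_{\text{content}}(\vec{x}, \vec{p}) + \beta \sum_{l \in S} \mathcal{L}^{l}_{\text{style}}(\vec{x}, \vec{a}) + \gamma \, \mathcal{L}_{\text{TV}}(\vec{x})
$$

with $\alpha$ = content_weight = 1, $\beta$ = style_weight (5, then 20) and $\gamma$ = tv_weight = $10^{-5}$, so $\beta / \alpha$ goes from 5 to 20.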

Here are the Neural Style parameters used:

#!/bin/bash
# Experiment 2, second run: same inputs, style weight raised from 5. to 20.
th ../../neural_style.lua \
-style_image new_style_image.jpg \
-style_blend_weights nil \
-content_image content_image.jpg \
-image_size 512 \
-gpu -1 \
-content_weight 1. \
-style_weight 20. \
-tv_weight 1e-5 \
-num_iterations 1000 \
-normalize_gradients \
-init image \
-init_image content_image.jpg \
-optimizer lbfgs \
-learning_rate 1e1 \
-lbfgs_num_correction 0 \
-print_iter 50 \
-save_iter 100 \
-output_image new_content_image.jpg \
-style_scale 1.0 \
-original_colors 0 \
-pooling max \
-proto_file ../../models/VGG_ILSVRC_19_layers_deploy.prototxt \
-model_file ../../models/VGG_ILSVRC_19_layers.caffemodel \
-backend nn \
-seed -1 \
-content_layers relu4_2 \
-style_layers relu1_1,relu2_1,relu3_1,relu4_1,relu5_1

Yeah, this method is pretty good at keeping the original colors, but some colors are lost, like the red on the lips.

Experiment 3:

Let's create luminance images for both the content image and the style image and ask Gatys' Neural Style not to keep the original colors (-original_colors 0). Note that I am using a content weight of 1. and a style weight of 5. The rest is pretty standard.

To extract the luminance of the content image and style image, I use my own software called theluminanceextracter. To inject the resulting luminance image back into the content image, I also use my own software called theluminanceswapper. It's very basic stuff.
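theluminanceextracter and theluminanceswapper aren't shown here, so the following is only a guess at the idea, using BT.601 luma weights (an assumption; the names are made up). The extracter turns each pixel into a gray pixel whose three channels equal its luminance, since neural_style works on RGB images. The swapper gives each content pixel the luminance of the stylized luminance image while keeping its chrominance: the luma weights sum to 1 and the BT.601 U and V weights sum to (almost) 0, so adding the same offset to R, G and B changes only the luminance, which is equivalent to replacing Y in YUV space.

```python
LUMA_WEIGHTS = (0.299, 0.587, 0.114)  # BT.601 luma weights (assumed)


def luminance(px):
    """Luminance of one (r, g, b) pixel with values in [0, 1]."""
    return sum(w * c for w, c in zip(LUMA_WEIGHTS, px))


def extract_luminance(image):
    """Gray image (r = g = b = Y) that can be fed to neural_style as an RGB image."""
    return [(y, y, y) for y in map(luminance, image)]


def swap_luminance(content, new_luminance_image):
    """Give each content pixel the luminance of the matching pixel of
    new_luminance_image (e.g. the stylized luminance image) and keep its colors.

    Clamping to [0, 1] can shift colors slightly for very bright or dark pixels."""
    out = []
    for c_px, l_px in zip(content, new_luminance_image):
        d = luminance(l_px) - luminance(c_px)  # how much brighter/darker to make it
        out.append(tuple(min(1.0, max(0.0, c + d)) for c in c_px))
    return out
```

Because the style transfer only ever sees gray images, it cannot change the colors at all; they all come from the content image at the swap step.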

Here are the Neural Style parameters used:

#!/bin/bash
# Experiment 3: luminance-only images, -original_colors 0, style weight 5.
th ../../neural_style.lua \
-style_image style_luminance_image.jpg \
-style_blend_weights nil \
-content_image content_luminance_image.jpg \
-image_size 512 \
-gpu -1 \
-content_weight 1. \
-style_weight 5. \
-tv_weight 1e-5 \
-num_iterations 1000 \
-normalize_gradients \
-init image \
-init_image content_luminance_image.jpg \
-optimizer lbfgs \
-learning_rate 1e1 \
-lbfgs_num_correction 0 \
-print_iter 50 \
-save_iter 100 \
-output_image new_content_luminance_image.jpg \
-style_scale 1.0 \
-original_colors 0 \
-pooling max \
-proto_file ../../models/VGG_ILSVRC_19_layers_deploy.prototxt \
-model_file ../../models/VGG_ILSVRC_19_layers.caffemodel \
-backend nn \
-seed -1 \
-content_layers relu4_2 \
-style_layers relu1_1,relu2_1,relu3_1,relu4_1,relu5_1

Let's change the weight for the style image from 5. to 20. in order to get more of the texture of the style image in the resulting image.

Here are the Neural Style parameters used:

#!/bin/bash
# Experiment 3, second run: same luminance images, style weight raised from 5. to 20.
th ../../neural_style.lua \
-style_image style_luminance_image.jpg \
-style_blend_weights nil \
-content_image content_luminance_image.jpg \
-image_size 512 \
-gpu -1 \
-content_weight 1. \
-style_weight 20. \
-tv_weight 1e-5 \
-num_iterations 1000 \
-normalize_gradients \
-init image \
-init_image content_luminance_image.jpg \
-optimizer lbfgs \
-learning_rate 1e1 \
-lbfgs_num_correction 0 \
-print_iter 50 \
-save_iter 100 \
-output_image new_content_luminance_image.jpg \
-style_scale 1.0 \
-original_colors 0 \
-pooling max \
-proto_file ../../models/VGG_ILSVRC_19_layers_deploy.prototxt \
-model_file ../../models/VGG_ILSVRC_19_layers.caffemodel \
-backend nn \
-seed -1 \
-content_layers relu4_2 \
-style_layers relu1_1,relu2_1,relu3_1,relu4_1,relu5_1

Yeah, this is probably the best method to preserve the original colors of the image. Method 2 is pretty good as well and probably easier to use as you don't have to bother with getting/swapping the luminance images.

If you are interested in trying to understand how Justin Johnson's Torch implementation of Gatys' Neural Style on github works, I made a post about it: Justin Johnson's Neural Style Torch Implementation Explained.

Automatic depth map generation, stereo matching, multi-view stereo, Structure from Motion (SfM), photogrammetry, 2d to 3d conversion, etc. Check the "3D Software" tab for my free 3d software. Turn photos into paintings like impasto oil paintings, cel shaded cartoons, or watercolors. Check the "Painting Software" tab for my image-based painting software. Problems running my software? Send me your input data and I will do it for you.

Monday, November 25, 2019

Monday, November 4, 2019

2d to 3d Image Conversion Software - the3dconverter2

The3dconverter2 is an attempt at making it easier to convert a 2d image into a 3d image via the creation of a dense depthmap. I know there is already 2d to 3d Image Conversion Software - The 3d Converter, but I think the idea of relative depth constraints is probably unnecessary, which is why the3dconverter2 does not have them. Unlike DMAG4 and DMAG11, which limit the propagation of assigned depths to areas of similar colors, the3dconverter2 allows totally free propagation of assigned depths. This means that the only way to prevent depths from propagating over object boundaries (between objects at different depths) is to use a so-called "edge image" (also used by DMAG4).

After you have extracted the3dconverter2-x64.rar, you should see a directory called "joker". It contains everything you need to check that the software is running correctly on your machine (windows 64 bit). The file called "the3dconverter2.bat" is the one you double-click to run the software. It tells windows where the executable is. You need to edit the file so that the location of the executable is the correct one for your installation. I use notepad++ to edit text files but I am sure you can use notepad or wordpad with equal success. If you want to use the3dconverter2 on your own photograph, create a directory alongside "joker" (in other words, at the same level as "joker"), copy "the3dconverter2.bat" and "the3dconverter2_input.txt" from "joker" to that new directory and double-click "the3dconverter2.bat" after you have properly created all the input files the3dconverter2 needs.

The file "joker.xcf" is a gimp file that contains (as layers) content_image.png (the reference 2d image) and edge_rgba_image.png (the so-called edge image). It also contains the gimp paths for the depth constraints.

The input file "the3dconverter2_input.txt" contains the following lines:

content_image.png
sparse_depthmap_rgba_image.png
dense_depthmap_image.png
gimp_paths.svg
diffusion_direction_rgba_image.png
edge_rgba_image.png

Line 1 contains the name of the content rgb image. The file must exist.

Line 2 contains the name of the sparse depthmap rgba image. The file may or may not exist.

Line 3 contains the name of the dense depthmap image. The file will be created by the3dconverter2.

Line 4 contains the name of the gimp paths giving depth constraints. If the file does not exist, the3dconverter2 assumes there are no depth constraints given as gimp paths. In this case, there should be a sparse depthmap rgba image given.

Line 5 contains the name of the diffusion direction rgba image. If the file doesn't exist, the3dconverter2 assumes there are no regions where the diffusion direction is given. This is something I was working on but you can safely ignore this capability.

Line 6 contains the name of the edge rgba image. If the file doesn't exist, the3dconverter2 assumes there is no edge image.
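To make the role of each line concrete, here is a hypothetical reader for the3dconverter2_input.txt that applies the rules above: the content image must exist, line 3 is an output, and a missing optional file simply switches that input off. The field names are invented for this sketch, file names are resolved relative to the input file's directory (an assumption), and blank lines are skipped. This is not the3dconverter2's actual code.

```python
import os
import warnings

# Meaning of each line, in order (key names are made up for this sketch).
FIELDS = (
    "content_image",              # line 1: must exist
    "sparse_depthmap_image",      # line 2: optional
    "dense_depthmap_image",       # line 3: output, written by the3dconverter2
    "gimp_paths",                 # line 4: optional
    "diffusion_direction_image",  # line 5: optional, can be ignored
    "edge_image",                 # line 6: optional
)
OPTIONAL = ("sparse_depthmap_image", "gimp_paths",
            "diffusion_direction_image", "edge_image")


def read_input_file(path):
    """Map each field to a full path, or to None for a missing optional input."""
    base = os.path.dirname(os.path.abspath(path))
    with open(path) as f:
        names = [line.strip() for line in f if line.strip()]  # skip blank lines
    if len(names) < len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} file names, found {len(names)}")
    files = {key: os.path.join(base, name) for key, name in zip(FIELDS, names)}
    if not os.path.isfile(files["content_image"]):
        raise FileNotFoundError(files["content_image"])
    for key in OPTIONAL:
        if not os.path.isfile(files[key]):
            files[key] = None  # a missing optional file just switches that input off
    if files["sparse_depthmap_image"] is None and files["gimp_paths"] is None:
        warnings.warn("no gimp paths and no sparse depthmap: no depths to propagate")
    return files
```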

The content rgb image is of course the image for which a dense depthmap is to be created. It is recommended not to use very large images, as they may put a heavy strain on the solver (memory-wise). I always recommend starting small. Here, the reference rgb image is only 600 pixels wide. If the goal is to create 3d wiggles/wobbles or facebook 3d photos, it is more than enough. If you see that your machine can handle it (it doesn't take too long to solve and the memory usage is reasonable), then you can switch to larger reference rgb images.

The sparse depthmap rgba image is created just like with Depth Map Automatic Generator 4 (DMAG4) or Depth Map Automatic Generator 11 (DMAG11). You use the "Pencil Tool" (no anti-aliasing) to draw depth clues on a transparent layer. Depths can vary from pure black (deepest background) to pure white (closest foreground). When you zoom, you should see either fully transparent pixels or fully opaque pixels because, hopefully, all the tools you have used do not create anti-aliasing. If, for some reason, there are semi-transparent pixels in your sparse depthmap, click on Layer->Transparency->Threshold Alpha and then press Ok to remove the semi-transparent pixels. Note that you absolutely do not need to create a sparse depthmap rgba image to get the3dconverter2 going.
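The clean-up described above can be sketched as follows: threshold the alpha channel so every pixel is either fully transparent or fully opaque, then treat each opaque pixel as a depth clue between 0 (black, farthest) and 255 (white, closest). Pixels are plain row-major lists of RGBA tuples here, the threshold of 128 only approximates GIMP's default for Threshold Alpha, and the function names are made up; all of these are assumptions.

```python
def threshold_alpha(pixels, threshold=128):
    """Make every RGBA pixel fully transparent (alpha 0) or fully opaque (alpha 255)."""
    return [(r, g, b, 255 if a >= threshold else 0) for r, g, b, a in pixels]


def sparse_depths(pixels, width):
    """Collect the depth clues of a thresholded sparse depthmap.

    pixels: row-major list of (r, g, b, a) tuples with values 0-255.
    Returns {(x, y): depth} for every opaque pixel. The clues are assumed to be
    shades of gray, so the average of r, g and b is used as the depth.
    """
    depths = {}
    for i, (r, g, b, a) in enumerate(pixels):
        if a == 255:
            depths[(i % width, i // width)] = round((r + g + b) / 3)
    return depths
```

Without the thresholding step, a semi-transparent anti-aliased pixel would be ambiguous: it is neither a real depth clue nor clearly empty.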

Gimp paths are used to impose constraints on pixel depths. You use gimp paths to indicate that the pixels along a path should be at a specified depth. If you don't give gimp paths (no gimp paths file present), the3dconverter2 relies solely on the sparse depth map (and possibly the edge image if you provide one) to generate the dense depth map and behaves just like Depth Map Automatic Generator 4 (DMAG4). The name of a gimp path stores the depth at which the pixels making up the gimp path should be. The name of a gimp path must start with 3 digits ranging from 000 (pure black - furthest) to 255 (pure white - closest). For example, if the name of a gimp path is "146-blah blah blah", the pixels that make up the gimp path are supposed to be at a depth equal to 146. What is cool about this system is that it is quite simple to change the depth for a given gimp path: simply change the first 3 digits. To be perfectly clear, all pixels that make up a gimp path receive the depth assigned to the gimp path, not just the starting and ending pixel of each gimp path component.
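The naming rule is easy to check programmatically. The sketch below reads the depth from the first 3 digits of a path name, then assigns that depth to every pixel along each straight segment of a polygonal path, using Bresenham's line algorithm to pick the pixels. Rasterizing with Bresenham is an assumption about a reasonable way to do it (the3dconverter2 may sample paths differently), and the function names are hypothetical.

```python
import re

_DEPTH_PREFIX = re.compile(r"^(\d{3})")


def depth_from_path_name(name):
    """Depth encoded in the first 3 digits of a gimp path name (000-255)."""
    m = _DEPTH_PREFIX.match(name)
    if m is None:
        raise ValueError(f"path name {name!r} does not start with 3 digits")
    depth = int(m.group(1))
    if depth > 255:
        raise ValueError(f"depth {depth} in {name!r} is outside 000-255")
    return depth


def bresenham(x0, y0, x1, y1):
    """Yield the integer pixels on the segment (x0, y0)-(x1, y1), both ends included."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield x0, y0
        if x0 == x1 and y0 == y1:
            return
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy


def path_constraints(name, anchors):
    """{(x, y): depth} for every pixel along a polygonal path given by its anchors."""
    depth = depth_from_path_name(name)
    points = [(round(x), round(y)) for x, y in anchors]
    pixels = {p: depth for p in points[:1]}  # a single-anchor path is one pixel
    for (xa, ya), (xb, yb) in zip(points, points[1:]):
        for p in bresenham(xa, ya, xb, yb):
            pixels[p] = depth
    return pixels
```

For example, path_constraints("146-fence", [(0, 0), (3, 0), (3, 2)]) gives depth 146 to the six pixels of that L-shaped path, not only to its three anchors.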

When you are working with gimp paths, you should always have the Paths dialog window within easy reach. To get to it, click on Windows->Dockable Dialogs->Paths. Once you have created a gimp path and are done with it, it will show up in the Paths dialog window as "Unnamed" but will not be visible. To make it visible, just do the same thing you would do for layers (click to the left of the name until the eye shows up). After you create a gimp path (using the "Paths Tool"), gimp automatically names it "Unnamed". You can assign a depth to the gimp path by double-clicking on the path name and changing it to whatever depth you want the path to be.

To save the gimp paths, right-click the active path and select "Export Gimp Path". Make sure to select "Export all paths from this image" in the drop-down menu before saving. This will save all gimp paths into a single SVG file, e.g., "gimp_paths.svg".
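Reading the exported file back is a small XML job. This sketch assumes (unverified) that GIMP writes each path as an SVG path element whose id attribute holds the path name and whose d attribute describes a polygonal path as cubic Bezier segments with the control points sitting on the anchors. Under that assumption, dropping consecutive duplicate points leaves just the anchors. A path with several components would be merged into a single list here, and the function name is made up.

```python
import re
import xml.etree.ElementTree as ET

_NUMBER = re.compile(r"-?\d+(?:\.\d+)?(?:[eE][-+]?\d+)?")


def read_gimp_paths(svg_text):
    """Return [(path_name, [(x, y), ...]), ...] in document order."""
    root = ET.fromstring(svg_text)
    paths = []
    for elem in root.iter():
        if elem.tag.rsplit("}", 1)[-1] != "path":  # ignore the SVG namespace prefix
            continue
        nums = [float(n) for n in _NUMBER.findall(elem.get("d", ""))]
        points = list(zip(nums[0::2], nums[1::2]))
        # Control points equal to the anchors show up as repeated points: drop them.
        anchors = [p for i, p in enumerate(points) if i == 0 or p != points[i - 1]]
        paths.append((elem.get("id", ""), anchors))
    return paths
```

Each (name, anchors) pair can then be turned into per-pixel depth constraints using the 3-digit rule.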

It's probably a good idea at this point to explain how to create/edit paths in gimp:

To create a new path, click on "Paths Tool". In the "Tool Options", check the "Polygonal" box as we will only need to create paths that are polygonal (no Bezier curves needed here). Click (left) to create the first anchor.

To add an anchor, click (left). To close a path (you really don't need to do that here but it doesn't hurt to know), press CTRL and hover the cursor over the starting anchor and click (left).

To start a new path component (you really don't need to do that here but it doesn't hurt to know), press SHIFT and click (left).

To move an anchor, hover the cursor over an existing anchor, click (left) and drag.

To insert an anchor, press CTRL and click (left) anywhere on the segment between two existing anchors.

To delete an anchor, press SHIFT+CTRL and click (left) on the anchor you want to delete.

The edge rgba image tells the3dconverter2 not to diffuse the depths past the pixels that are not transparent in the edge image. It is similar to the edge image used in dmag4. To create an edge rgba image, create a new layer and trace along the object boundaries in the reference image. An object boundary is basically a boundary between two objects at different depths. To trace the edge image, I recommend using the "Pencil Tool" with a hard brush (no anti-aliasing) of size 1 (smallest possible). Press SHIFT and click (left) to draw line segments. I usually use the color "red" for my edge images but you can use whatever color you want. Make sure to always use tools that are not anti-aliased! If you need to use the "Eraser Tool", check "Hard Edge" in the tool options. When you zoom on your edge image, it should look like crisp staircases.

The white pixels are actually transparent (checkerboard pattern in gimp).
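To picture what the edge image does, here is a deliberately naive sketch of free depth propagation: every unconstrained pixel repeatedly takes the average of its 4-connected neighbours, constrained pixels keep their depth, and edge pixels never pass their value on. A 1-pixel-wide line drawn with the Pencil Tool is 8-connected, which is enough to block 4-connected propagation. This Jacobi-style iteration is a stand-in, not the3dconverter2's solver (a real implementation would more likely solve the equivalent sparse linear system directly), and the function name is hypothetical.

```python
def diffuse_depths(width, height, constraints, edges, iterations=500):
    """Spread sparse depths over the whole image without crossing edge pixels.

    constraints: {(x, y): depth} from the sparse depthmap and the gimp paths;
                 these pixels keep their depth.
    edges: set of (x, y) pixels that are opaque in the edge image. They receive
           a value from their neighbours but never pass it on.
    Returns a list of rows of float depths.
    """
    depth = [[0.0] * width for _ in range(height)]
    for (x, y), d in constraints.items():
        depth[y][x] = float(d)
    for _ in range(iterations):
        new = [row[:] for row in depth]
        for y in range(height):
            for x in range(width):
                if (x, y) in constraints:
                    continue
                # 4-connected neighbours inside the image that are not edge pixels
                nbrs = [depth[ny][nx]
                        for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1))
                        if 0 <= nx < width and 0 <= ny < height and (nx, ny) not in edges]
                if nbrs:
                    new[y][x] = sum(nbrs) / len(nbrs)
        depth = new
    return depth
```

Without edges, two depth clues on a row produce a smooth ramp between them; with a vertical edge line between them, each side takes the depth of its own clue instead.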

The following screen grab shows all the layers contained in content_image.xcf:

The following screen grab shows all the gimp paths contained in content_image.xcf:

As you can see, the names of the gimp paths all start with 3 digits ranging from 000 to 255. The name of the gimp path indicates its depth.

Once you are done with the editing in gimp, simply save the layer containing the sparse depthmap as sparse_depthmap_rgba_image.png (if you have one), the layer containing the edge image as edge_rgba_image.png, and the gimp paths as gimp_paths.svg. Double-click on "the3dconverter2.bat" and the3dconverter2 should spit out the dense depthmap in no time as dense_depthmap_image.png assuming nothing went wrong.

This is the dense depth map generated by the3dconverter2:

To see if the dense depthmap is good, you can use Depth Player. To create a wiggle/wobble, you can use Wiggle Maker. If your goal is solely to create wiggles, the depth map doesn't need to be very accurate, especially away from the immediate foreground (only worry about the foreground objects).

Any problems? Send me your .xcf gimp file (zipped)! If the file is big, please use something like wetransfer to send it to me or make it available via google drive.

I made a video on youtube that explains the whole 2d to 3d image conversion process from start to finish, kinda:

Here's another video tutorial that's much more in-depth but kinda assumes you already know how to install and run the3dconverter2:

Tips and tricks:

- I think it's a good idea to order the paths from foreground to background in the "Paths" dockable window. It's a little bit less confusing especially if you have tons of them.

- Use depthplayer to check your work often.

Download link (for windows 64 bit): the3dconverter2-x64.rar

Source code: the3dconverter2 on github.

Saturday, October 5, 2019

Linux executables

I am gonna attempt to explain how to use (some of) my software on linux. First, you need to locate the downloadable archives that are available for linux on the 3d software page. If the particular piece of software you are interested in exists for linux, then you are in luck. If it does not exist, then let me know and I will try to make it available on linux for you.

Now, I am assuming you have installed a fairly recent 64-bit distribution of linux on your computer and that you have installed gcc using your favorite package manager. You will need gcc version 5.4.0 or newer. If you have no idea how to do that, open your favorite package manager, like the Synaptic package manager, click on "Development", search for "g++" and click on the "g++" package (it should be at the top of the list). Click "Apply" and Bob's your uncle. Since gcc 5.4.0 is pretty old (2016), you should have no problem running my software on a recent ubuntu linux install.

Let's say you want to run Depth Map Automatic Generator 5 (DMAG5) on linux. You are in luck as it is part of ugosoft3d-5-nogui-x86_64-linux.tar.gz.

After you have extracted the archive (using gunzip and tar), you should have something like:

- dmag5 (that's the dmag5 executable)

- dmag5_test (that's a convenient directory to test dmag5)

- dmag5b (that's the dmag5b executable)

- dmag5b_test (that's a convenient directory to test dmag5b)

- a bunch of shared libraries that you may or may not need depending on your linux install

The way to run dmag5 is to go into the dmag5_test directory, type "./../dmag5" and press return in the terminal (assumed to be a bash shell). If you get an error about some shared library, type "LD_LIBRARY_PATH=./.." and press return. Here, I am simply indicating that the shared libraries are located one directory up. If you have put the shared libraries somewhere else, use that directory instead. Then, type "export LD_LIBRARY_PATH" and press return. The executable should now run without problems. You are very likely to get an error about the libpng12.so.0 shared library, which the executable was linked against and which is quite old. Of course, you can put all those linux commands into a bash shell script, for convenience.

Same idea for dmag5b.
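If you would rather not retype those commands every time, the same steps can be wrapped in a small script. Here is a sketch in Python (a bash script works just as well) that runs an executable from inside its test directory with the library directory put first in LD_LIBRARY_PATH. It assumes the directory layout listed above, and the function names are made up. Prepending instead of overwriting keeps any library paths you already had.

```python
import os
import subprocess


def env_with_libs(lib_dir, base_env=None):
    """Copy of the environment with lib_dir first in LD_LIBRARY_PATH
    (like LD_LIBRARY_PATH=... followed by export LD_LIBRARY_PATH)."""
    env = dict(os.environ if base_env is None else base_env)
    lib_dir = os.path.abspath(lib_dir)
    old = env.get("LD_LIBRARY_PATH")
    env["LD_LIBRARY_PATH"] = (lib_dir + os.pathsep + old) if old else lib_dir
    return env


def run_from_test_dir(test_dir, executable, lib_dir=".."):
    """Run e.g. ../dmag5 from inside dmag5_test, searching lib_dir (relative to
    test_dir) for shared libraries before the default system locations."""
    test_dir = os.path.abspath(test_dir)
    exe = os.path.normpath(os.path.join(test_dir, executable))
    libs = os.path.join(test_dir, lib_dir)
    return subprocess.run([exe], cwd=test_dir, env=env_with_libs(libs), check=True)
```

Usage, from the directory where the archive was extracted: run_from_test_dir("dmag5_test", "../dmag5") or run_from_test_dir("dmag5b_test", "../dmag5b").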

To get some explanation about what the parameters in "*_input.txt" (where * is the executable name) mean, you can either download the 64-bit version of the executable via the 3d software page and look at "*_manual.pdf" (it usually exists and is up to date) or go to the web page that describes the executable, again via the 3d software page.

Friday, September 6, 2019

Justin Johnson's Neural Style Torch Implementation Explained

If you have found yourself scratching your head trying to understand Justin Johnson's torch implementation (on github) of the paper "A Neural Algorithm of Artistic Style" by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge, help is on the way in the form of the following technical note:

Justin Johnson's Neural Style Torch Implementation Explained

It assumes you have read (a few times) either "A neural algorithm of artistic style" by Gatys, Leon A and Ecker, Alexander S and Bethge, Matthias or "Image style transfer using convolutional neural networks" by Gatys, Leon A and Ecker, Alexander S and Bethge, Matthias. Note that the latter is actually the better paper.

By the way, here is an example of what Neural Style can do to transfer the texture from style image to content image:

Note that I am mostly interested in texture transfer without color transfer; in other words, I always want the colors of the content image to remain, and the only thing I want transferred is the texture of the style image. This is a personal preference, as I really like to see the brush strokes.

Wednesday, August 7, 2019

Photogrammetry - Gordon's family in front of garage

My good friend Gordon sent me a set of 5 pictures taken with an iPhone 4S and asked for a 3d reconstruction with as many points as possible using Structure from Motion 10 (SfM10) and Multi View Stereo 10 (MVS10).

Step 1 is structure from motion using sfm10. The purpose of sfm10 is to compute the camera positions and orientations corresponding to each input image. It also outputs a very coarse 3d reconstruction.

Input file for sfm10:

Number of cameras = 5

Image name for camera 0 = IMG_0071.JPG

Image name for camera 1 = IMG_0076.JPG

Image name for camera 2 = IMG_0078.JPG

Image name for camera 3 = IMG_0083.JPG

Image name for camera 4 = IMG_0100.JPG

Focal length = 2800

initial camera pair = 0 2

Number of trials (good matches) = 10000

Max number of iterations (Bundle Adjustment) = 1000

Min separation angle (low-confidence 3D points) = 0.1

Max reprojection error (low-confidence 3D points) = 10

Radius (animated gif frames) = 5

Angle amplitude (animated gif frames) = 1

It's the same as the input file in the sfm10_test directory except for the focal length. The focal length needs to be adjusted depending on the size of the images, here 2448x3264. I chose a focal length of 2800 (about the same as the width/height). You could use different focal lengths in the same ballpark and it wouldn't make much of a difference (until you go too far and sfm10 starts issuing errors about the initial camera pair).
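If you want to script this step, here is a minimal sketch under a few assumptions: the input file is named sfm10_input.txt (following the "*_input.txt" naming mentioned above), it holds one "label = value" line per parameter without blank lines, and the starting focal length guess is the average of the image width and height. That averaging rule is my own reading of "about the same as the width/height", not something sfm10 requires; the post itself simply uses 2800. The function name focal_guess and the FOCAL variable are hypothetical. Check sfm10_manual.pdf for the authoritative file format.

```shell
#!/bin/bash
# Hypothetical helper: a starting focal length in pixels, taken as the
# average of the image width and height (integer arithmetic).
focal_guess() {
  echo $(( ($1 + $2) / 2 ))
}

# Focal length written to the input file: 2800 as in this post, unless the
# (hypothetical) FOCAL environment variable overrides it.
FOCAL="${FOCAL:-2800}"

# Write the sfm10 input file used in this post (assumed name sfm10_input.txt).
cat > sfm10_input.txt <<EOF
Number of cameras = 5
Image name for camera 0 = IMG_0071.JPG
Image name for camera 1 = IMG_0076.JPG
Image name for camera 2 = IMG_0078.JPG
Image name for camera 3 = IMG_0083.JPG
Image name for camera 4 = IMG_0100.JPG
Focal length = ${FOCAL}
initial camera pair = 0 2
Number of trials (good matches) = 10000
Max number of iterations (Bundle Adjustment) = 1000
Min separation angle (low-confidence 3D points) = 0.1
Max reprojection error (low-confidence 3D points) = 10
Radius (animated gif frames) = 5
Angle amplitude (animated gif frames) = 1
EOF
```

For the 2448x3264 images here, `focal_guess 2448 3264` prints 2856, in the same ballpark as the 2800 used in this post.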

Output from sfm10 (the last few bits):

Number of 3D points = 2547

Average reprojection error = 1.13272

Max reprojection error = 13.0476

Adding camera 3 to the 3D reconstruction ... done.

Looking for next camera to add to 3D reconstruction ...

Looking for next camera to add to 3D reconstruction ... done.

No more cameras to add to the 3D reconstruction

Average depth = 8.197 min depth = 1.43428 max depth = 44.7613

Step 2 is multi-view stereo with mvs10, which actually builds the dense 3d reconstruction using the results from sfm10.

Input for mvs10:

nvm file = duh.nvm

Min match number (camera pair selection) = 100

Max mean vertical disparity error (camera pair selection) = 1

Min average separation angle (camera pair selection) = 0.1

radius (disparity map) = 16

alpha (disparity map) = 0.9

color truncation (disparity map) = 30

gradient truncation (disparity map) = 10

epsilon = 255^2*10^-4 (disparity map)

disparity tolerance (disparity map)= 0

downsampling factor (disparity map)= 4

sampling step (dense reconstruction)= 1

Min separation angle (low-confidence 3D points) = 0.1

Max reprojection error (low-confidence image points) = 10

Min image point number (low-confidence 3D points) = 3

Radius (animated gif frames) = 1

Angle amplitude (animated gif frames) = 1

This is pretty much the same as the input file in the mvs10_test directory, with a few changes. For the radius (disparity map), I used 16 instead of 32, for no good reason really. For the sampling step (dense reconstruction), I used 1 instead of 2 so that the number of 3d points would be as large as possible.
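Since mvs10 gets rerun several times in this post with only a few values changed, a small generator for the input file can save some typing. This is a minimal sketch, assuming the file is named mvs10_input.txt (the "*_input.txt" naming) and holds one line per parameter without blank lines; write_mvs10_input is a hypothetical name, and the three arguments are the only values that change between the runs.

```shell
#!/bin/bash
# Hypothetical helper: write mvs10_input.txt with the settings used in this
# post. Arguments (defaults are the first run's values):
#   $1 = Min separation angle (low-confidence 3D points)
#   $2 = Max reprojection error (low-confidence image points)
#   $3 = downsampling factor (disparity map)
write_mvs10_input() {
  local min_angle="${1:-0.1}" max_err="${2:-10}" dsf="${3:-4}"
  cat > mvs10_input.txt <<EOF
nvm file = duh.nvm
Min match number (camera pair selection) = 100
Max mean vertical disparity error (camera pair selection) = 1
Min average separation angle (camera pair selection) = 0.1
radius (disparity map) = 16
alpha (disparity map) = 0.9
color truncation (disparity map) = 30
gradient truncation (disparity map) = 10
epsilon = 255^2*10^-4 (disparity map)
disparity tolerance (disparity map)= 0
downsampling factor (disparity map)= ${dsf}
sampling step (dense reconstruction)= 1
Min separation angle (low-confidence 3D points) = ${min_angle}
Max reprojection error (low-confidence image points) = ${max_err}
Min image point number (low-confidence 3D points) = 3
Radius (animated gif frames) = 1
Angle amplitude (animated gif frames) = 1
EOF
}
```

The four mvs10 runs in this post correspond to `write_mvs10_input 0.1 10 4`, `write_mvs10_input 0.5 2 4`, `write_mvs10_input 0.5 2 2`, and `write_mvs10_input 1 2 2`.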

Output from mvs10 (the last bits):

Number of 3D points = 1626906

Average reprojection error = 1.59341

Max reprojection error = 12.6571
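To compare runs like the ones that follow, it can help to pull the final statistics out of the console output. A minimal sketch, assuming you saved the console output of sfm10 or mvs10 to a log file (for example by piping it through tee) and that the log lines look exactly like the "last bits" quoted in this post; summarize_log is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: print the last reported point count and reprojection
# errors from a saved sfm10/mvs10 console log. The statistics may be printed
# several times (e.g. as cameras are added), so only the final triple is kept.
summarize_log() {
  local log="$1"
  grep -E '^(Number of 3D points|Average reprojection error|Max reprojection error) = ' "$log" | tail -n 3
}
```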

Now, having a 3d wobble that looks alright doesn't mean the 3d reconstruction is as good as it could be, since you may have bad 3d points, mostly outliers that stretch the depth of the 3d scene way too much. You can see that when loading the point cloud (duh.ply) into Sketchfab (make sure to zip the file before uploading to Sketchfab so as not to hit the max upload limit, which is 50 MB), MeshLab, or CloudCompare. If you can't even zoom in on what you think the 3d scene should look like, you have outliers and it's time to tighten the parameters that relate to the low-confidence 3d points or image points. First, make sure "Min image point number (low-confidence 3D points)" is greater than or equal to 3. I made sure of that, so let's move on. You may want to increase "Min separation angle (low-confidence 3D points)", say, from 0.1 to 0.5. You may also want to decrease "Max reprojection error (low-confidence image points)", say, from 10.0 to 2.0. Let's do just that, rerun mvs10, and see what happens. Note that mvs10 should run much faster this time as it doesn't have to recompute the depth maps (saved in the .mvs files).
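The zip-before-upload advice can be scripted as well. This is a minimal sketch, assuming the Info-ZIP `zip` command is installed; the function names are hypothetical, and the 50 MB limit is read conservatively as 50,000,000 bytes since the exact definition Sketchfab uses is not stated here.

```shell
#!/bin/bash
# Hypothetical helper: fail if a file is larger than the 50 MB upload limit
# quoted in this post (taken conservatively as 50,000,000 bytes).
check_upload_size() {
  local limit=50000000 bytes
  [ -f "$1" ] || return 1
  bytes=$(( $(wc -c < "$1") ))
  if [ "$bytes" -gt "$limit" ]; then
    echo "too large: $1 ($bytes bytes)" >&2
    return 1
  fi
  echo "ok: $1 ($bytes bytes)"
}

# Hypothetical helper: zip a point cloud (e.g. duh.ply -> duh.zip, without
# directory names inside the archive) and check the archive size.
zip_for_sketchfab() {
  local ply="$1" archive="${1%.ply}.zip"
  zip -q -j "$archive" "$ply" || return 1
  check_upload_size "$archive"
}
```

Usage: `zip_for_sketchfab duh.ply`.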

Input to mvs10:

nvm file = duh.nvm

Min match number (camera pair selection) = 100

Max mean vertical disparity error (camera pair selection) = 1

Min average separation angle (camera pair selection) = 0.1

radius (disparity map) = 16

alpha (disparity map) = 0.9

color truncation (disparity map) = 30

gradient truncation (disparity map) = 10

epsilon = 255^2*10^-4 (disparity map)

disparity tolerance (disparity map)= 0

downsampling factor (disparity map)= 4

sampling step (dense reconstruction)= 1

Min separation angle (low-confidence 3D points) = 0.5

Max reprojection error (low-confidence image points) = 2

Min image point number (low-confidence 3D points) = 3

Radius (animated gif frames) = 1

Angle amplitude (animated gif frames) = 1

Output from mvs10 (the last bits):

Number of 3D points = 1114293

Average reprojection error = 0.837404

Max reprojection error = 2.58606

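Of course, the number of 3d points has decreased, but the 3d reconstruction should be more accurate (the average reprojection error went from 1.59341 to 0.837404 and the max reprojection error from 12.6571 to 2.58606).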
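To put numbers on that trade-off, a one-line percent-change helper is enough. A minimal sketch using awk for the floating-point arithmetic; percent_change is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: percent change from an old value ($1) to a new value
# ($2), rounded to one decimal place. Negative means a decrease.
percent_change() {
  awk -v o="$1" -v n="$2" 'BEGIN { printf "%.1f\n", (n - o) / o * 100 }'
}
```

Applied to the two mvs10 runs above, `percent_change 1626906 1114293` prints -31.5 (points) and `percent_change 1.59341 0.837404` prints -47.4 (average reprojection error).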

If you see stepping in the 3d reconstruction (depth jumps), it's probably because the "downsampling factor (disparity map)" is too large. You can clearly see that when you load up the point cloud that's in the mvs10_test directory (assuming the "downsampling factor (disparity map)" was not changed and is still equal to 4). If you change it from 4 to 2, the depth maps should have more grayscale values (and therefore more possible depth values for the 3d points), but mvs10 is gonna take 4 times longer to run. Note that if you change the "downsampling factor (disparity map)", you need to delete the *.mvs files from your directory to force mvs10 to recompute the depth maps. Once mvs10 completes, if you later change only parameters related to the low-confidence 3d or image points, mvs10 will run much faster as it doesn't have to recompute the depth maps. Anyways, let's rerun mvs10 using "downsampling factor (disparity map) = 2" and see what happens (remember to remove the .mvs files first).
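Forgetting to delete the cached depth maps is easy, so it can be worth tying that step to the parameter change. A minimal sketch, assuming the input file is mvs10_input.txt, the *.mvs files live in the current directory (as described above), and GNU sed is available for `-i`; set_downsampling_factor is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: change "downsampling factor (disparity map)" in an
# existing mvs10 input file and delete the cached depth maps (*.mvs) so that
# mvs10 recomputes them on the next run. Requires GNU sed (-i in place).
set_downsampling_factor() {
  local dsf="$1" input="${2:-mvs10_input.txt}"
  sed -i "s/^downsampling factor (disparity map)=.*/downsampling factor (disparity map)= ${dsf}/" "$input"
  # The depth maps depend on the downsampling factor, so the cache is stale.
  rm -f -- ./*.mvs
}
```

Going from 4 to 2 doubles the depth map resolution in each direction, i.e. four times as many pixels, which is consistent with the roughly 4 times longer run.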

Input to mvs10:

nvm file = duh.nvm

Min match number (camera pair selection) = 100

Max mean vertical disparity error (camera pair selection) = 1

Min average separation angle (camera pair selection) = 0.1

radius (disparity map) = 16

alpha (disparity map) = 0.9

color truncation (disparity map) = 30

gradient truncation (disparity map) = 10

epsilon = 255^2*10^-4 (disparity map)

disparity tolerance (disparity map)= 0

downsampling factor (disparity map)= 2

sampling step (dense reconstruction)= 1

Min separation angle (low-confidence 3D points) = 0.5

Max reprojection error (low-confidence image points) = 2

Min image point number (low-confidence 3D points) = 3

Radius (animated gif frames) = 1

Angle amplitude (animated gif frames) = 1

Output of mvs10 (the last bits):

Number of 3D points = 1346440

Average reprojection error = 0.789732

Max reprojection error = 2.7325

Just for fun, let's increase "Min separation angle (low-confidence 3D points)" from 0.5 to 1.0 and rerun mvs10 (without deleting the .mvs files, of course).

Input to mvs10:

nvm file = duh.nvm

Min match number (camera pair selection) = 100

Max mean vertical disparity error (camera pair selection) = 1

Min average separation angle (camera pair selection) = 0.1

radius (disparity map) = 16

alpha (disparity map) = 0.9

color truncation (disparity map) = 30

gradient truncation (disparity map) = 10

epsilon = 255^2*10^-4 (disparity map)

disparity tolerance (disparity map)= 0

downsampling factor (disparity map)= 2

sampling step (dense reconstruction)= 1

Min separation angle (low-confidence 3D points) = 1

Max reprojection error (low-confidence image points) = 2

Min image point number (low-confidence 3D points) = 3

Radius (animated gif frames) = 1

Angle amplitude (animated gif frames) = 1

Output of mvs10 (the last bits):

Number of 3D points = 1333404

Average reprojection error = 0.78909

Max reprojection error = 2.73256

Note that the parameters for sfm10 and mvs10 are explained in sfm10_manual.pdf and mvs10_manual.pdf, respectively, which should be sitting in the directory where you extracted the archive ugosoft3d-10-x64.rar.

Tuesday, May 21, 2019

A quick guide on using StereoPhoto Maker (SPM) to generate depth maps

This post describes how to automatically generate depth maps with StereoPhoto Maker. Masuji Suto, the author of StereoPhoto Maker, has integrated two of my tools, DMAG5 and DMAG9b, into StereoPhoto Maker in order for StereoPhoto Maker to be able to generate depth maps. If you don't want to use StereoPhoto Maker at all to generate depth maps, you can certainly use my tools directly: Epipolar Rectification 9b (ER9b) or Epipolar Rectification 9c (ER9c) to rectify/align the stereo pair, Depth Map Automatic Generator 5 (DMAG5) or Depth Map Automatic Generator 5b (DMAG5b) to generate the (initial) depth map, and Depth Map Automatic Generator 9b (DMAG9b) to improve the depth map. All those programs can be downloaded through the 3D Software Page.

I recommend using my stereo tools directly but I understand the convenience StereoPhoto Maker might offer, which is why I wrote this post. The issue I have with StereoPhoto Maker is the rectifier which not only aligns the stereo pair but also can provide the min and max disparities needed by the automatic depth map generator. I have no control over that part in StereoPhoto Maker and that bothers me a little bit. Just be aware that, to get good depth maps, it is quite important to have a properly rectified stereo pair and correct min and max disparities.

Be extremely careful about accepting the min and max disparity values (aka the background and foreground values) that StereoPhoto Maker can automatically provide! I strongly recommend you do that part manually. If the values found automatically and the ones found manually differ a lot, I would be quite suspicious about the rectification/alignment of the stereo pair. In that case, I would suggest using ER9b or ER9c to rectify or, if you are really keen on using StereoPhoto Maker, checking the "Better Precision (slow)" box under "Edit->Preferences->Adjustment" to get better rectification. Also, be extremely careful about StereoPhoto Maker resizing your stereo pair because of what's in "maximum image width"! I would not let StereoPhoto Maker resize the stereo pair and instead make sure that the stereo pair you are processing is under what's in "maximum image width".

Alrighty then, let's get back to the business at hand, that is, generating depth maps with StereoPhoto Maker. I am assuming that you have installed StereoPhoto Maker and the DMAG5/DMAG9b combo on your computer. If you haven't done so yet, follow the instructions in How to make Facebook 3D Photo from Stereo pair. I am also assuming you have downloaded and extracted the DMAG5/DMAG9b files into a directory called dmag5_9. That directory should look like so:

Because alignment of the stereo pair is of prime importance in depth map generation, I recommend going into "Edit->Preferences->Adjustment" and checking the box that says "Better Precision (slow)". As I don't particularly like large images, the first thing I do after loading the stereo pair is to resize the images to something smaller. In this guide, the stereo pair is an mpo coming from a Fuji W3 camera. The initial dimensions are 3441x2016. You could generate the depth maps using those dimensions but everything is gonna take longer. Clicking on "Edit->Resize", I change the width to 1200. I guess I could have resized to something larger but I wouldn't resize to anything larger than 3000 pixels. Once the stereo pair has been resized, I click on "Adjust->Auto-alignment" to align/rectify the images. As an alternative to SPM's alignment tool, you can use my rectification tools: Epipolar Rectification 9b (ER9b) or Epipolar Rectification 9c (ER9c).

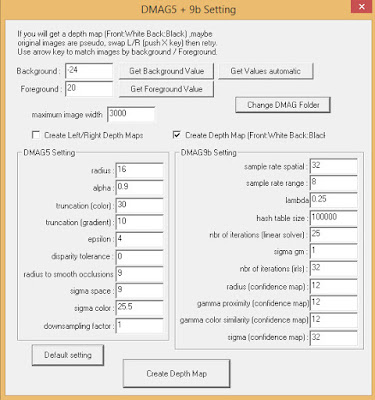

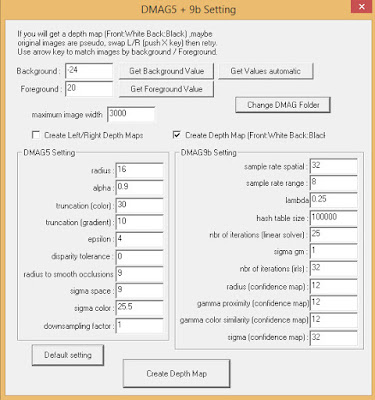

To generate the depth map, I click on "Edit->Depth Map->Create depth map from stereo pair" where I am presented with this window:

What I recommend doing is getting the background and foreground disparity values manually first. To get the background value, use the arrow keys so that the two red/cyan views come into focus (merge) for a background point. Same idea for the foreground value. I keep track of those two values and then click on "Get Values (automatic)". If the automatic values make sense when compared to the ones obtained manually, I just leave them be. If they don't, I edit them and put back the values obtained manually. As an alternative to having SPM compute the min and max disparities, you can use my rectification tools: Epipolar Rectification 9b (ER9b) or Epipolar Rectification 9c (ER9c). They both give you the min and max disparities in their output.

Since my image width is less than 3000 pixels, I don't bother with the "maximum image width" box. I don't like the idea of SPM resizing the images automatically, so I always make sure that my image width is less than what's in the "maximum image width" box. The reason is that, if you change the image dimensions, you are supposed to also change the min and max disparities, and I am not sure SPM does that. So, beware!

I only want the left depth map, so I keep "Create left/right depth maps" unchecked. Since I want white for the foreground and black for the background (I always use white for the foreground in my depth maps), I click on "Create depth map (front: white, back: black)". Because I clicked on it, when it is time to save the depth map via "Edit->Depth map->Save as Facebook 3d photo (2d photo+depth)", I will need to click on "white" for "Displayed depth map front side->white" so that the depth map does not get inverted when saved by SPM. If you don't mind a depth map where the foreground is black, leave the "Create depth map (front: white, back: black)" radio button alone.
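If you do resize the stereo pair yourself, the min and max disparities have to be rescaled too. Disparities are horizontal pixel offsets, so they scale linearly with the image width. A minimal sketch using awk, rounding to the nearest pixel; scale_disparity is a hypothetical name and the example disparity values are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: rescale a disparity (in pixels) measured at one image
# width to another width, rounded to the nearest pixel.
#   $1 = disparity, $2 = original width, $3 = new width
scale_disparity() {
  awk -v d="$1" -v ow="$2" -v nw="$3" 'BEGIN { printf "%.0f\n", d * nw / ow }'
}
```

For example, hypothetical disparities of -20 and 30 measured on the 3441-pixel-wide Fuji W3 images become `scale_disparity -20 3441 1200` = -7 and `scale_disparity 30 3441 1200` = 10 after resizing to a width of 1200.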

I recommend using the default parameters in DMAG5 (on the left) and DMAG9b (on the right) to get the first depth map (as you may need to tweak the parameters to get the best possible depth map). To be sure I have the default settings (SPM stores the latest used settings), I click on "Default settings". To get the depth map, I click on "Create depth map". This is the result:

To get the left image on the left and the depth map on the right, click on the "Side-by-side" icon in the taskbar. For more info on DMAG5, check Depth Map Automatic Generator 5 (DMAG5). For more info on DMAG9b, check Depth Map Automatic Generator 9b (DMAG9b) and/or have a look at dmag9b_manual.pdf in the dmag5_9 directory.

Now, if you go into the dmag5_9 directory, you will find some very interesting intermediate images:

- 000_l.tif. That's the left image as used by DMAG5.

- 000_r.tif. That's the right image as used by DMAG5.

- con_map.tiff. That's the confidence map computed by DMAG9b. White means high confidence, black means low confidence. If you clicked on "Create left/right depth maps", the confidence map gets overwritten when DMAG9b is called to optimize the right depth map.

- dps_l.tif. That's the left depth map produced by DMAG5.

- dps_r.tif. That's the right depth map produced by DMAG5. Even if the "Create left/right depth maps" box is unchecked, the right depth map is always generated by DMAG5 in order to detect left/right inconsistencies in the left depth map.

- out.tif. That's the depth map produced by DMAG9b. DMAG9b uses the depth map generated by DMAG5 as input and improves it. If you clicked on "Create left/right depth maps", there should be out.tif (left depth map) and out_r.tif (right depth map).

The depth map "out.tif" is basically the same as the depth map that you get by clicking on "Edit->Depth map->Save as Facebook 3D photo (image+depth)".

It should be noted that once you have created a depth map, you cannot re-click on "Edit->Depth map->Create depth map from stereo pair", possibly change parameters, and create another depth map. If you do that, the new depth map is basically going to be garbage because the right image has been replaced by the previously created depth map (take a look at 000_r.tif in the dmag5_9 directory to convince yourself). To re-generate the depth map, you need to undo or reload the stereo pair.

At this point, you may want to play with the DMAG5/DMAG9b parameters to see if you can improve the depth map. I think it's a good idea but be aware there may be areas in your image where the depth map cannot be improved upon. For instance, if you have an area that has no texture (think of a blue sky or a white wall) or an area that has a repeated texture, it is quite likely the depth map is gonna be wrong no matter what you do. So, improving the depth map is not always easy as, usually, some areas will get better while others will get worse. Some parameters like DMAG5 radius or DMAG9b sample rate spatial depend on the image dimensions and should kind of be tailored to the image width. I think it's best to change one parameter at a time and see the effect it has on the depth map. If things get better, keep changing that parameter (in the same direction) until things get worse. Then, you tweak the next parameter. Of course, it is up to you whether or not you want to spend the time tinkering with parameters. If you always shoot with the same camera setup, this tinkering may only need to be done once.

Let's see which parameters used by DMAG5 and DMAG9b are worth tinkering with in the quest for a better depth map. I believe changing the sample rate spatial used by DMAG9b from the default 32 to 16 or even 8 should be the first thing to try when improving the depth map. I think the default 32 is probably too large, especially if the image width is not that large (like here for our test case).

What DMAG5 parameters (on the left side of the window) give you the most bang for your buck when trying to improve the mesh quality?

- radius. The larger the radius, the smoother the depth map generated by DMAG5 is going to be but the less accurate. As the radius goes down, more noise gets introduced into the depth map.

- downsampling factor. The larger the downsampling factor, the faster DMAG5 will run but the less accurate. Running DMAG5 using a downsampling factor of 2 is four times faster than running DMAG5 using a downsampling factor of 1 (no downsampling).
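The "four times faster" figure follows from the pixel count: a downsampling factor divides both the width and the height, so the work shrinks with the square of the factor. A minimal sketch, assuming run time is roughly proportional to the number of pixels processed; relative_runtime is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: how many times longer a run takes when the
# downsampling factor goes from $1 to $2, assuming run time is proportional
# to (width / factor) x (height / factor).
relative_runtime() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%g\n", (a / b) ^ 2 }'
}
```

`relative_runtime 2 1` prints 4 (going from 2 to 1 takes about four times longer), and `relative_runtime 1 2` prints 0.25.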

Note that those observations concern DMAG5 only. So, you should look at the dps_l.tif file to see the effects of changing DMAG5 parameters. Also, you may find that sometimes the depth map generated by DMAG5 is actually better than the one generated by the combo DMAG5/DMAG9b. Note that DMAG9b can be so aggressive that variations in the depth map produced by DMAG5 do not matter much.

What happens to the left depth map generated by DMAG5 if you change the radius from 16 to 8 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG5 radius from 16 to 8 (every other parameter set to default).

Left depth map generated by DMAG5 (dps_l.tif). Changed DMAG5 radius from 16 to 8 (every other parameter set to default).

What happens to the left depth map generated by DMAG5 if you change the downsampling factor from 2 to 1 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG5 downsampling factor from 2 to 1 (every other parameter set to default).

Left depth map generated by DMAG5 (dps_l.tif). Changed DMAG5 downsampling factor from 2 to 1 (every other parameter set to default).

What DMAG9b parameters (on the right side of the window) give you the most bang for your buck when trying to improve the mesh quality?

- sample rate spatial. The larger the sample rate spatial, the more aggressive DMAG9b will be. I recommend going from 32 (default value) to 16, 8, and even 4. If you can clearly see "blocks" in your depth map, the sample rate spatial is probably too large and should be reduced (by a factor of 2).

- sample rate range. The larger the sample rate range, the more aggressive DMAG9b will be. The default value is 8 but you can try 4 or 16 and see if it's any better.

- lambda. The larger the lambda, the smoother the depth map is going to be (in other words, the more aggressive DMAG9b will be). The default value is 0.25 but you can certainly try larger or smaller values. If you don't want the output depth map to be too different from the depth map generated by DMAG5 (dps_l.tif), use smaller values for lambda (you will probably also need to use smaller values for the sample rate spatial and the sample rate range).
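The one-parameter-at-a-time approach recommended above can be written down as a test plan before you start clicking. A minimal sketch: the defaults and candidate values are the ones discussed in this post, not an exhaustive search, and print_plan is a hypothetical name. It only prints the settings to try; judging each depth map is still up to you.

```shell
#!/bin/bash
# Print a one-parameter-at-a-time test plan: each line changes a single
# DMAG5 or DMAG9b parameter and keeps every other parameter at its default.
# Entries are "name:default:candidate values".
print_plan() {
  local entry name rest default values v
  for entry in \
    "DMAG5 radius:16:8" \
    "DMAG5 downsampling factor:2:1" \
    "DMAG9b sample rate spatial:32:16 8 4" \
    "DMAG9b sample rate range:8:4 16" \
    "DMAG9b lambda:0.25:0.5"; do
    name=${entry%%:*}      # text before the first colon
    rest=${entry#*:}       # text after the first colon
    default=${rest%%:*}    # default value
    values=${rest#*:}      # space-separated candidate values
    for v in $values; do
      echo "$name: $default -> $v (others at default)"
    done
  done
}

print_plan
```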

What happens to the depth map generated by DMAG9b if you change the sample rate spatial from 32 to 16 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG9b sample rate spatial from 32 to 16 (every other parameter set to default).

Depth map generated by DMAG9b (out.tif). Changed DMAG9b sample rate spatial from 32 to 16 (every other parameter set to default).

What happens to the depth map generated by DMAG9b if you change the sample rate range from 8 to 4 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG9b sample rate range from 8 to 4 (every other parameter set to default).

Depth map generated by DMAG9b (out.tif). Changed DMAG9b sample rate range from 8 to 4 (every other parameter set to default).

What happens to the depth map generated by DMAG9b if you change lambda from 0.25 to 0.5 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG9b lambda from 0.25 to 0.5 (every other parameter set to default).

Depth map generated by DMAG9b (out.tif). Changed DMAG9b lambda from 0.25 to 0.5 (every other parameter set to default).

Video version of this post about generating depth maps in SPM:

Another shorter video:

Now, if you want to manually edit/fix the generated depth map, you can do so in SPM by clicking on "Edit->Depth map->Correct depth map". If you want to edit the generated depth map semi-automatically, you can use the techniques centered around DMAG11 or DMAG4 that are described in Case Study - How to get depth maps from old stereocards using ER9c, DMAG5, DMAG9b, and DMAG11 and Case Study - How to improve depth map quality with DMAG9b and DMAG4.

For the ultimate experience in editing depth maps semi-automatically, I recommend using 2d to 3d Image Conversion Software - the3dconverter2. Load up the left image and the depth map (add an alpha channel to the depth map if it does not have one), use the eraser tool to delete the parts of the depth map you do not like (that becomes sparse_depthmap_rgba_image.png, needed by the3dconverter), create a new layer and trace where you don't want the depths to bleed through (that becomes edge_rgba_image.png, needed by the3dconverter), and run the3dconverter to get the new depth map, called dense_depthmap_image.png. You do not need to worry about gimp_paths.svg, ignored_gradient_rgba_image.png, and emphasized_gradient_rgba_image.png as they are not needed.

Video tutorial that explains how to use the3dconverter2 to edit depth maps:

I recommend using my stereo tools directly, but I understand the convenience StereoPhoto Maker might offer, which is why I wrote this post. My issue with StereoPhoto Maker is its rectifier, which not only aligns the stereo pair but can also provide the min and max disparities needed by the automatic depth map generator. I have no control over that part in StereoPhoto Maker and that bothers me a little bit. Just be aware that, to get good depth maps, it is quite important to have a properly rectified stereo pair and correct min and max disparities.

Be extremely careful about accepting the min and max disparity values (aka the background and foreground values) that StereoPhoto Maker can automatically provide! I strongly recommend you do that part manually. If the automatically found values differ a lot from the manually found ones, I would be quite suspicious about the rectification/alignment of the stereo pair. In that case, I would suggest using ER9b or ER9c to rectify or, if you are really keen on using StereoPhoto Maker, checking the "Better Precision (slow)" box under "Edit->Preferences->Adjustment" to get better rectification. Also, be extremely careful about StereoPhoto Maker resizing your stereo pair because of the value in "maximum image width"! I would not let StereoPhoto Maker resize the stereo pair and would instead make sure that the width of the stereo pair you are processing is under the value in "maximum image width".
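If you want something a bit more systematic than eyeballing it, here's a minimal Python sketch of that sanity check. The 3-pixel tolerance and the example disparities are hypothetical (SPM has no such setting); pick a tolerance that makes sense for your image width.

```python
def disparities_agree(manual, automatic, tolerance=3):
    """Return True if two (min, max) disparity pairs differ by at most
    `tolerance` pixels at both ends.

    `tolerance` is a hypothetical threshold, not an SPM setting.
    """
    manual_min, manual_max = manual
    auto_min, auto_max = automatic
    return (abs(manual_min - auto_min) <= tolerance
            and abs(manual_max - auto_max) <= tolerance)


# Hypothetical values read off the red/cyan view vs. "Get Values (automatic)".
print(disparities_agree((-12, 30), (-11, 31)))  # True: fine to keep the automatic values
print(disparities_agree((-12, 30), (-25, 60)))  # False: re-check the rectification
```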

Alrighty then, let's get back to the business at hand, that is, generating depth maps with StereoPhoto Maker. I am assuming that you have installed StereoPhoto Maker and the DMAG5/DMAG9b combo on your computer. If you haven't done so yet, follow the instructions in How to make Facebook 3D Photo from Stereo pair. I am also assuming you have downloaded and extracted the DMAG5/DMAG9b files into a directory called dmag5_9. That directory should look like so:

Because alignment of the stereo pair is of prime importance in depth map generation, I recommend going into "Edit->Preferences->Adjustment" and checking the box that says "Better Precision (slow)". As I don't particularly like large images, the first thing I do after loading the stereo pair is to resize the images to something smaller. In this guide, the stereo pair is an mpo coming from a Fuji W3 camera. The initial dimensions are 3441x2016. You could generate the depth maps using those dimensions but everything is gonna take longer. Clicking on "Edit->Resize", I change the width to 1200. I guess I could have resized to something larger but I wouldn't resize to anything larger than 3000 pixels. Once the stereo pair has been resized, I click on "Adjust->Auto-alignment" to align/rectify the images. As an alternative to SPM's alignment tool, you can use my rectification tools: Epipolar Rectification 9b (ER9b) or Epipolar Rectification 9c (ER9c).
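For the record, here's the arithmetic behind that resize as a small Python sketch, assuming the aspect ratio is preserved (SPM may round the height slightly differently). The scale factor is worth keeping in mind because disparities, being measured in pixels, shrink by the same factor.

```python
def resized_dimensions(width, height, new_width):
    """Return (new_width, new_height), preserving the aspect ratio."""
    new_height = round(height * new_width / width)
    return new_width, new_height


# Fuji W3 MPO used in this guide: 3441x2016 resized to a width of 1200.
print(resized_dimensions(3441, 2016, 1200))  # (1200, 703)
print(1200 / 3441)  # scale factor, roughly 0.35
```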

To generate the depth map, I click on "Edit->Depth Map->Create depth map from stereo pair" where I am presented with this window:

What I recommend is getting the background and foreground disparity values manually first. To get the background value, use the arrow keys until the red and cyan views merge (come into focus) at a background point. Same idea for the foreground value. I note those two values and then click on "Get Values (automatic)". If the automatic values make sense when compared to the ones obtained manually, I just leave them be. If they don't, I edit them and put back the values obtained manually. As an alternative to having SPM compute the min and max disparities, you can use my rectification tools: Epipolar Rectification 9b (ER9b) or Epipolar Rectification 9c (ER9c). They both give you the min and max disparities in their output.

Since my image width is less than 3000 pixels, I don't bother with the "maximum image width" box. I don't like the idea of SPM resizing the images automatically, so I always make sure that my image width is less than the value in the "maximum image width" box. The reason is that, if you change the image dimensions, you are supposed to also change the min and max disparities, and I am not sure SPM does it. So, beware!

I only want the left depth map, so I keep "Create left/right depth maps" unchecked. Since I want white for the foreground and black for the background (I always use white for the foreground in my depth maps), I click on "Create depth map (front: white, back: black)". Because of that choice, when it is time to save the depth map via "Edit->Depth map->Save as Facebook 3d photo (2d photo+depth)", I need to select "white" for "Displayed depth map front side" so that SPM does not invert the depth map when saving it. If you don't mind a depth map where the foreground is black, leave the "Create depth map (front: white, back: black)" radio button alone.
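Here's a minimal Python sketch of the two conversions mentioned above. The first rescales a min/max disparity pair when the image width changes (disparities are in pixels, so they scale with the width); rounding outward is my own conservative choice so the search range doesn't get too narrow. The second flips an 8-bit depth map between the "front: white" and "front: black" conventions. The disparity values are made up for illustration.

```python
import math


def rescale_disparities(d_min, d_max, old_width, new_width):
    """Scale a (min, max) disparity pair for a new image width.

    Rounds the min down and the max up so the range never shrinks
    below the true scaled range.
    """
    scale = new_width / old_width
    return math.floor(d_min * scale), math.ceil(d_max * scale)


def invert_depth(values):
    """Flip 8-bit depth values: white foreground <-> black foreground."""
    return [255 - v for v in values]


# Hypothetical disparities found at width 1200, image then halved to 600.
print(rescale_disparities(-10, 35, 1200, 600))  # (-5, 18)
print(invert_depth([0, 128, 255]))              # [255, 127, 0]
```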

To get a first depth map, I recommend using the default parameters for DMAG5 (on the left) and DMAG9b (on the right); you can always tweak them afterwards to get the best possible depth map. To make sure I have the default settings (SPM stores the last used settings), I click on "Default settings". To get the depth map, I click on "Create depth map". This is the result:

To get the left image on the left and the depth map on the right, click on the "Side-by-side" icon in the toolbar. For more info on DMAG5, check Depth Map Automatic Generator 5 (DMAG5). For more info on DMAG9b, check Depth Map Automatic Generator 9b (DMAG9b) and/or have a look at dmag9b_manual.pdf in the dmag5_9 directory.

Now, if you go into the dmag5_9 directory, you will find some very interesting intermediate images:

- 000_l.tif. That's the left image as used by DMAG5.

- 000_r.tif. That's the right image as used by DMAG5.

- con_map.tiff. That's the confidence map computed by DMAG9b. White means high confidence, black means low confidence. If you checked "Create left/right depth maps", the confidence map gets overwritten when DMAG9b is called to optimize the right depth map.

- dps_l.tif. That's the left depth map produced by DMAG5.

- dps_r.tif. That's the right depth map produced by DMAG5. Even if the "Create left/right depth maps" box is unchecked, the right depth map is always generated by DMAG5 in order to detect left/right inconsistencies in the left depth map.

- out.tif. That's the depth map produced by DMAG9b. DMAG9b takes the depth map generated by DMAG5 as input and improves it. If you checked "Create left/right depth maps", there should be both out.tif (left depth map) and out_r.tif (right depth map).
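To give an idea of what a left/right consistency check does, here's a minimal Python sketch on a single scanline. It's the textbook version of the check, not necessarily what DMAG5 does internally, and it assumes a left pixel at column x with disparity d matches the right pixel at column x - d (DMAG5's sign convention may differ). The max_diff tolerance is a hypothetical parameter.

```python
def lr_consistency(left_disp, right_disp, max_diff=1):
    """Flag pixels of one scanline whose left and right disparities disagree.

    Assumes left pixel x with disparity d matches right pixel x - d.
    Returns one boolean per pixel: True = consistent, False = suspect
    (mismatch, occlusion, or match outside the image).
    """
    width = len(left_disp)
    consistent = []
    for x, d in enumerate(left_disp):
        xr = x - d
        if 0 <= xr < width:
            consistent.append(abs(d - right_disp[xr]) <= max_diff)
        else:
            consistent.append(False)  # the match falls outside the right image
    return consistent


left = [1, 1, 1, 3, 1, 1]
right = [1, 1, 1, 1, 1, 1]
print(lr_consistency(left, right))  # [False, True, True, False, True, True]
```

In a full pipeline, suspect pixels would typically be given low confidence or filled in from their neighbors.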

The depth map "out.tif" is basically the same as the depth map that you get by clicking on "Edit->Depth map->Save as Facebook 3D photo (image+depth)".

It should be noted that, once you have created a depth map, you cannot simply click on "Edit->Depth map->Create depth map from stereo pair" again, change parameters, and create another depth map. If you do, the new depth map is going to be garbage because the right image has been replaced by the previously created depth map (take a look at 000_r.tif in the dmag5_9 directory to convince yourself). To regenerate the depth map, you need to undo or reload the stereo pair.

At this point, you may want to play with the DMAG5/DMAG9b parameters to see if you can improve the depth map. I think it's a good idea but be aware there may be areas in your image where the depth map cannot be improved upon. For instance, if you have an area that has no texture (think of a blue sky or a white wall) or an area that has a repeated texture, it is quite likely the depth map is gonna be wrong no matter what you do. So, improving the depth map is not always easy as, usually, some areas will get better while others will get worse. Some parameters like DMAG5 radius or DMAG9b sample rate spatial depend on the image dimensions and should kind of be tailored to the image width. I think it's best to change one parameter at a time and see the effect it has on the depth map. If things get better, keep changing that parameter (in the same direction) until things get worse. Then, you tweak the next parameter. Of course, it is up to you whether or not you want to spend the time tinkering with parameters. If you always shoot with the same camera setup, this tinkering may only need to be done once.
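The one-parameter-at-a-time approach above is basically a coordinate search. Here's a minimal Python sketch of it. The score function is a stand-in for you looking at the depth map (there's no automatic quality score in SPM), and "sample_rate_spatial" is a hypothetical dictionary key, not a real DMAG9b flag.

```python
def tune_parameter(params, name, step, score, max_steps=10):
    """Keep changing one parameter in the same direction until the score
    stops improving, then return the best settings found.

    params: dict of current settings
    step:   function giving the next value to try (e.g. halve it)
    score:  function rating a settings dict, higher is better
    """
    best = dict(params)
    best_score = score(best)
    for _ in range(max_steps):
        candidate = dict(best)
        candidate[name] = step(best[name])
        candidate_score = score(candidate)
        if candidate_score <= best_score:
            break  # things got worse (or no better): stop here
        best, best_score = candidate, candidate_score
    return best


# Stand-in score that happens to peak at 8; in practice, judge the depth map by eye.
def fake_score(p):
    return -abs(p["sample_rate_spatial"] - 8)


print(tune_parameter({"sample_rate_spatial": 32}, "sample_rate_spatial",
                     lambda v: v // 2, fake_score))  # {'sample_rate_spatial': 8}
```

To tweak the next parameter, call it again with the settings it returned and a different name.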

Let's see which DMAG5 and DMAG9b parameters are worth tinkering with in the quest for a better depth map. I believe changing the DMAG9b sample rate spatial from the default 32 to 16 or even 8 should be the first thing to try when improving the depth map. I think the default of 32 is probably too large, especially if the image width is not that large (as in our test case).
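One way to see why the default of 32 may be too large for a smallish image: assuming DMAG9b samples the image on a regular grid with one cell every "sample rate spatial" pixels (in the spirit of a bilateral grid), the number of cells across the image is roughly the width divided by that value. This is a back-of-the-envelope Python sketch under that assumption, not DMAG9b's code.

```python
import math


def grid_cells(width, height, sample_rate_spatial):
    """Approximate grid size (cells across, cells down), assuming one cell
    every `sample_rate_spatial` pixels in each direction."""
    return (math.ceil(width / sample_rate_spatial),
            math.ceil(height / sample_rate_spatial))


for s in (32, 16, 8):
    print(s, grid_cells(1200, 703, s))
# 32 (38, 22)
# 16 (75, 44)
# 8 (150, 88)
```

With the same default of 32, the full-size 3441x2016 image would get about 108 cells across versus 38 for the 1200-pixel version, so each cell covers a much larger chunk of the scene in the smaller image.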

What DMAG5 parameters (on the left side of the window) give you the most bang for your buck when trying to improve the depth map quality?

- radius. The larger the radius, the smoother the depth map generated by DMAG5 is going to be but the less accurate. As the radius goes down, more noise gets introduced into the depth map.

- downsampling factor. The larger the downsampling factor, the faster DMAG5 will run but the less accurate. Running DMAG5 using a downsampling factor of 2 is four times faster than running DMAG5 using a downsampling factor of 1 (no downsampling).
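Some quick Python arithmetic behind those two parameters. Downsampling by a factor f in each direction leaves about 1/f² of the pixels, which is consistent with the roughly four-times speedup for a factor of 2. For the radius, I'm assuming it is the half-width of a square matching window (so the window is 2r + 1 pixels on a side); that's an assumption about DMAG5, not something stated above.

```python
def pixel_count(width, height, factor):
    """Number of pixels left after downsampling by `factor` in each direction."""
    return (width // factor) * (height // factor)


def window_side(radius):
    """Side length of a square window with the given radius (assumed meaning)."""
    return 2 * radius + 1


full = pixel_count(1200, 703, 1)  # 843600
half = pixel_count(1200, 703, 2)  # 210600
print(full / half)                # just over 4
print(window_side(16), window_side(8))  # 33 17
```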

Those observations concern DMAG5 only, so you should look at dps_l.tif to see the effects of changing DMAG5 parameters. You may also find that the depth map generated by DMAG5 is sometimes actually better than the one generated by the DMAG5/DMAG9b combo. Also keep in mind that DMAG9b can be so aggressive that variations in the depth map produced by DMAG5 do not matter much.
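If you'd rather put a number on how much DMAG9b changed the DMAG5 output (dps_l.tif vs out.tif), a mean absolute difference is a simple option. Here's a minimal Python sketch that works on flat lists of gray values and assumes both depth maps have the same dimensions.

```python
def mean_abs_difference(depth_a, depth_b):
    """Average absolute per-pixel difference between two depth maps given
    as flat sequences of gray values. 0 means identical."""
    if len(depth_a) != len(depth_b) or not depth_a:
        raise ValueError("depth maps must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(depth_a, depth_b)) / len(depth_a)


print(mean_abs_difference([0, 10, 20, 30], [0, 20, 20, 50]))  # 7.5
```

Assuming 8-bit grayscale TIFFs and Pillow installed, something like list(Image.open("dps_l.tif").convert("L").getdata()) would give the flat list for each file; a large value means DMAG9b moved the depths a lot.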

What happens to the left depth map generated by DMAG5 if you change the radius from 16 to 8 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG5 radius from 16 to 8 (every other parameter set to default).

Left depth map generated by DMAG5 (dps_l.tif). Changed DMAG5 radius from 16 to 8 (every other parameter set to default).

What happens to the left depth map generated by DMAG5 if you change the downsampling factor from 2 to 1 (every other parameter set to default)? Let's find out!

"Create depth map from stereo pair" window. Changed DMAG5 downsampling factor from 2 to 1 (every other parameter set to default).

Left depth map generated by DMAG5 (dps_l.tif). Changed DMAG5 downsampling factor from 2 to 1 (every other parameter set to default).

What DMAG9b parameters (on the right side of the window) give you the most bang for your buck when trying to improve the depth map quality?

- sample rate spatial. The larger the sample rate spatial, the more aggressive DMAG9b will be. I recommend going from 32 (default value) to 16, 8, and even 4. If you can clearly see "blocks" in your depth map, the sample rate spatial is probably too large and should be reduced (by a factor of 2).

- sample rate range. The larger the sample rate range, the more aggressive DMAG9b will be. The default value is 8 but you can try 4 or 16 and see if it's any better.

- lambda. The larger the lambda, the smoother the depth map is going to be (in other words, the more aggressive DMAG9b will be). The default value is 0.25 but you can certainly try larger or smaller values. If you don't want the output depth map to be too different from the depth map generated by DMAG5 (dps_l.tiff), use smaller values for lambda (you will probably also need to use smaller values for the sample rate spatial and the sample rate range).
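To get a feel for what lambda does, here's a minimal 1-D sketch in Python of a confidence-weighted smoothing problem: minimize the sum of c_i (x_i - t_i)² plus lambda times the sum of (x_{i+1} - x_i)², solved with Gauss-Seidel sweeps. This is a simplified stand-in, not DMAG9b's actual algorithm: an edge-aware solver would also weight the smoothness term by how similar neighboring pixels look in the image, which this sketch ignores. The targets play the role of the DMAG5 depths and the weights the role of a confidence map; all values are made up.

```python
def smooth_1d(targets, confidence, lam, sweeps=200):
    """Minimize sum_i c_i*(x_i - t_i)**2 + lam * sum_i (x_{i+1} - x_i)**2.

    Each Gauss-Seidel update sets x_i to the value that minimizes the
    energy with its neighbors held fixed. Assumes all confidences are positive.
    """
    x = [float(t) for t in targets]
    n = len(x)
    for _ in range(sweeps):
        for i in range(n):
            neighbors = [x[j] for j in (i - 1, i + 1) if 0 <= j < n]
            num = confidence[i] * targets[i] + lam * sum(neighbors)
            den = confidence[i] + lam * len(neighbors)
            x[i] = num / den
    return x


def total_variation(x):
    """Sum of absolute jumps between neighbors: lower means smoother."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


depths = [10, 10, 10, 60, 10, 10, 10]   # a spike from a bad match
confidence = [1, 1, 1, 0.1, 1, 1, 1]    # low confidence on the spike
for lam in (0.25, 2.0):
    print(lam, round(total_variation(smooth_1d(depths, confidence, lam)), 1))
```

The larger lambda flattens the spike much more, which matches the "larger lambda, smoother depth map" behavior described above.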