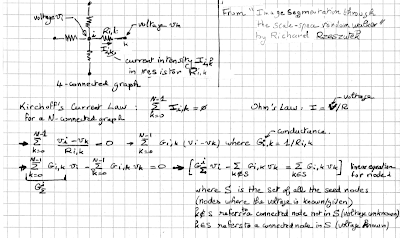

Now, how do you solve the "Random Walker" problem? Well, one way of doing so is by using an electrical circuit analogy. Given a region S, ground all seeded pixels that are not associated with S and put a voltage of unity to all seeded pixels associated with S, and solve for the unknown voltages using Kirchoff's Current Law and Ohm's Law. It's a linear system of equations which can be solved rather easily. In all cases, the obtained voltages are the probabilities we were discussing just above. The following pictures kinda explain the main points of the method. A great deal comes from Richard's Rzeszutek Masters Thesis entitled "Image Segmentation through the Scale Space Random Walker".

Using Kirchoff's Current Law and Ohm's Law to obtain the equation each graph node (pixel) must satisfy.

Well, we can use the exact same methodology to do semi-automatic depth map generation for 2D to 3D image conversion. Again, the user is asked to work a little, but this time, he/she has to put down some depth values (shades of gray) using brush strokes, creating a sparse depth map. The depth values which vary from 0 to 255 (the grayscale range) are converted into voltages which vary from 0 to 1. Just like with image segmentation, using Kirchoff's Current Law and Ohm's Law, we obtain a system of linear equations, which, when solved, give the unknown voltages at the pixels that were not painted. Multiply the voltage by the grayscale range and you get a grayscale intensity or depth.

Random Walks, as is, is quite sensitive to noise, not unlike like many computer vision methodologies. To alleviate this problem, the Ryerson team uses a scale space approach which they call SSRW (Scale Space Random Walks) as opposed to plain RW (Random Walks). In the scale space approach, the original image is smoothed (convolved by an isometric Gaussian kernel) as many times as desired. By stacking the images on top of each other, the graph is given an extra dimension to make it a 6-connected three-dimensional graph. This apparently improves the quality of the depth maps produced but it comes at a price: the number of equations to solve is multiplied by the number of (smoothing) levels. See "Image Segmentation using Scale-Space Random Walks" by Richard Rzeszutek, Thomas El-Maraghi, and Dimitrios Androutsos for more info on SSRW.

The following pictures were taken from "Semi-Automatic 2D to 3D Image Conversion Using Scale-Space Random Walks and a Graph Cuts Based Depth Prior" by R. Phan, R. Rzeszutek and D. Androutsos to illustrate the SSRW process:

That is Clever Clever Clever! Two simple variables, Shade and Placement inform Depth and Segmentation, I would dd a third variable, a small simple arc -say for instainstance, laterally across that pole in the front, which would add a Horizontal Gradient to the solution for that segment, (relative to the chosen color and the angle of the arc) to inform >>>3D Roundness<<< Eliminating the Flat CardBoard Cutout effect. That may even fool the eyes of Native Stereoscopic Film Purists such as myself and eliminate theneed for sites such as RealorFake3D.com where the films are listed as Native Filmed 3D and which are Converted after the fact. For a Face you could do as little as 5 quick arcs, overall on top at the firhead, small one at the eyes and nose and a medium one at the chin. High angle on the nose informs a stronger gradient shallower gradient on the forehead a broad rounding effect. You could even make the arcs Non-Uniform Rational b-Splines offering the ability to affect the tangents after drawing the arc to perfect the way, say, the nose has a peaked gradient. Enjoy some StereoScopic Star Trek TOS CGI created by myself for S3D 'practice' ;-) http://www.youtube.com/watch?v=sleY7hmsYOY

ReplyDeleteThank You, Amazing work and ideas!

Robert: if you consider, let's say, the forehead of a face and you consider the change of depth in the horizontal direction, you have a non-linear gradient (it varies slowly starting from the center of the head and then recedes very quickly). Unfortunately, and unless i am missing something, it's very had to model a non-linear gradient in photoshop or gimp. i have used "curves" but it's really not very precise. on top of that, for the forehead, you also need a gradient in the vertical direction. this can get pretty complex and i am not sure it's worth doing.

DeleteWell.. Studios are used to paying as much as $100,000 per Minute of top tier conversion, it's already worth Hand-Drawing / Rotoscoping on my Wacom Cintiq at that price, much less finding out a method of automation. :) Non Uniform b-Spline MEANS non-linear, one arc provides 3 variable spreads, the center curve angle and the curve angle at each end of the spline (enough information to make as complex a gradient as needed), adding additional 'weighted' points would give even more control! ---Perhaps for the vertical, make a Cross or even Lattice of spline arcs, Even now, using non-uniform / adjusted tension splines (Visually I mean) in After Effects, Autodesk FLARE, etc. ALREADY is a thousand times more efficient than hand drawing every frame in grayscale! (especially with auto tracking) With any amount of your amazing mathematical interpolation to make the process that much smarter / automated (even procedural!) Surely there is room in the conversion world for some hybrid of our two distinct methods!! :~) Thanks for the response

Deletei totally understand what you mean. I am however relying on Photoshop or Gimp or any other free program to provide the "sparse" depth map to interpolate and it's a bit lacking. i have 0 experience with AE, and any of the prop programs like flare, maya, etc. Basically, at a minimum, one would need a (free) program that can draw a "depth" curve (spline, bezier, whatever) along a line segment anywhere in the 2d picture and see the 2d image deform in real time along that curve according to the depth. Not too hard to do in terms of programming but it still would require quite a bit of skill from the user (not everybody is a sculptor even if you are just sculpting along a line.) Also, it might be easier to go all out and just use a 3d sculpting program like z-brush or sculptris (free) to render a single scene like a portrait. thanks for your input.

DeleteHi, first of all I want to thank you for making this resources available to the public. Recently I was reading about semi-automatic conversion and I found this paper http://zurich.disneyresearch.com/~owang/data/stereobrush_sbim2011_final_opt.pdf that uses a discontinous approach to produce a less smooth depthmap and preserve the edges. Also, have you think about the posibility of implement the warping technique mentioned in this paper to fill disoccluded areas?

ReplyDeleteHI: I remember reading that article. I chose to implement the method described in this post (DMAG4) because it was probably simpler. When you have continuous depth changes in one object like a person's face, it's really difficult to steer the automatic converter in the right direction because you have to use depth gradients (the painting part) in two directions and hope the automatic converter will get it right. Basically, the user has to be a sculptor using only shades of gray to sculpt, this is extremely difficult. Of course, if the picture is "cartoony" and each object is at a given depth (cardboard 3-d), then it's much easier.

DeleteThanks, for answering. But recently I discovered that the hole filling technique described in the paper is not useful with abrupt changes in the depth information. For example if the scene has an object moving from foreground to background multiple times, it will cause the background to stretch and expand to fill the holes in a very unpleasant way.

DeleteWhich paper? The one in the post or the one you are mentioning. The technique described in the post assumes that objects at different depths have different colors. If they don't, then there's gonna be "fattening" of the objects. I am not sure how you can alleviate this since most of these algorithms are based on the paradigm "color change = likely disparity change".

DeleteDisney Research Paper, not yours! Sorry! The issue i describe is when you apply the hole filling technique from the Disney Research paper > (read previous reply)

DeleteI explained in the reply of July 7, 2014 at 12:45 PM why I did not implemented the method.

Delete